I would like to start this week’s new edition of Security Week with news that has nothing to do with information security. Volkswagen diesel vehicles happened to be emitting far more contaminants than shown during the tests.

Before we see the full picture, I would stick to this statement and not get overly judgmental. This story proves that the role of the software in today’s world is paramount: it turned out that a small tweak in the code is enough to alter a critical feature in a way no one would notice.

According to Wired, it was quite easy to mask higher emission levels. Do you know how the lab testing is performed? A car is placed on a device that mimics road driving, the accelerator is pressed, the wheels roll, and the exhaust emissions are then analyzed.

What’s the difference between this lab test and actual driving? The steering wheel is not in action. That means this could be programmed as a single condition: when no one is steering, we must be at the MOT station. This hack could be discovered by accident, which is what I think ultimately happened.

Volkswagen says emissions deception actually affects 11 million cars http://t.co/8Z0MMR6kBO

— WIRED (@WIRED) September 23, 2015

Again, until the story is fully clarified, this difference in exhaust emissions analysis might be considered either intentional, with the blame to be placed on the car maker (or a group of people responsible for the code), or unintentional. Could it be a mistake? It could, for sure. In today’s weekly digest of Threatpost.com news we will cover stories about various coding mistakes and how they can be used for different purposes, including earning money. Check here for more stories.

D-Link exposed its own digital certificates by mistake

News. Detailed research published on the Dutch website Tweakers.net, read with generous help from Google Translate.

Imagine you are a producer of various networking appliances, ranging from routers to surveillance cameras. You accompany your equipment with firmware, drivers, software, firmware update software, driver update software, etc. It is all stored in some “Workflow” folder on a secret server. Everything is updated, distributed, uploaded to update servers according to the schedule, then the updates are flagged, and, ultimately, users get their updates. Smooth, eh?

D-Link Accidentally Leaks Private Code-Signing Keys – http://t.co/0mmP6Mm5wv

— Threatpost (@threatpost) September 18, 2015

It goes without saying that one cannot manage the entire hardware inventory manually: this is a job for scripts. See, we get a fresh piece of code, run a .bat file (or a shell script, or Python, or whatever), the code is distributed among designated folders, everything is archived – and everyone is happy.

And this is Jack the Engineer, who decided to radically improve the script, tested it in a couple of instances, and was pretty satisfied with the result. And this is the bug Jack built. Just one line of the code responsible for selecting the appropriate update files or folders lacked one little dash or had an extra parenthesis – and behold, the data you held dear has now leaked into the public domain and is being mailed to countless users.


Well, of course, I don’t claim my take is 100% accurate – it might not be. But here’s what happened: a cautious user, who had just downloaded the firmware update for his D-Link camera, noticed that the archive contained private keys to the vendor’s software. There were a number of certificates in the archive, some of them outdated, but one of them expired only on September 3rd – not too long ago.

But before that date, the key had remained exposed for six months and could have been used to sign any software – including, consequently, malware. It was an obvious mistake, shameful and dangerous. We are now living in an era when a line of 512 numbers may include anything: a key necessary to infect millions of computers, a pile of money in virtual currency, or an access code to classified information.

But at the same time 512 bytes is a tiny grain of sand in the ocean of your hard drive, easily washed out into the public domain. The only hope we have is that no one notices the failure – which is frequently the case. But at times someone does find the flaw, as in this case, although no one has yet found malware that leverages any of the exposed keys.
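A release script can at least check its own output before anything goes public. Below is a minimal sketch of such a check, assuming the update is shipped as a ZIP archive. The marker and extension lists, the function names and the demo archive are all illustrative assumptions and say nothing about how D-Link actually builds its updates.

```python
import io
import zipfile

# Markers and extensions that usually indicate private key material.
# Both lists are illustrative assumptions, not an exhaustive policy.
PEM_MARKERS = (b"PRIVATE KEY-----",)
KEY_EXTENSIONS = (".pfx", ".p12", ".key", ".pvk")


def find_key_material(archive):
    """Return the sorted names of archive members that look like private keys."""
    suspicious = set()
    with zipfile.ZipFile(archive) as zf:
        for info in zf.infolist():
            if info.is_dir():
                continue
            name = info.filename
            # Cheap check first: a file extension typical for key containers.
            if name.lower().endswith(KEY_EXTENSIONS):
                suspicious.add(name)
                continue
            # Otherwise look for a PEM private key header in the contents.
            data = zf.read(info)
            if any(marker in data for marker in PEM_MARKERS):
                suspicious.add(name)
    return sorted(suspicious)


def build_demo_archive():
    """Build an in-memory archive that mimics a careless firmware bundle."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        zf.writestr("firmware/camera.bin", b"\x00\x01\x02")
        zf.writestr("firmware/readme.txt", b"release notes")
        zf.writestr("signing/vendor.pfx", b"binary blob")
        zf.writestr("signing/old.pem", b"-----BEGIN RSA PRIVATE KEY-----\nabc\n")
    buf.seek(0)
    return buf


if __name__ == "__main__":
    for name in find_key_material(build_demo_archive()):
        print("refusing to publish, found:", name)
```

A check like this will not catch every format a key can hide in, but it is cheap enough to run on every build.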

XcodeGhost: a bookmark in Apple’s IDE

News. The research by Palo Alto. The list of affected apps. The official statement by Apple (in Chinese, to our dismay).

Imagine you are a Chinese iOS app developer. Nothing particular to imagine here, though: developer kits and tools are the same for developers from any country. Let’s just add a sprinkle of geographical peculiarity. Say, you buy a brand new Mac, install the Xcode IDE and start coding.

It’s totally fine, except for one thing: when taken from the official Apple website, the free Xcode downloads at a turtle’s pace – thanks to the Great Firewall. It’s way faster and simpler to download it from a local website – what’s the difference? The thing is free anyway.

Then, totally out of the blue, some apps (popular and obscure alike) turn out to have a malicious injection in the code, which (at the very least!) sends data about the device to a remote C&C server. In the worst-case scenario, the device accepts commands from the server, which compromise the user and exploit iOS vulnerabilities.

It turns out that the malicious embedding was present in the local versions of Xcode, and several releases were affected. That means someone created those embeddings on purpose. It gets even worse: the problem is not so much that popular apps like the WeChat IM or the local Angry Birds version were compromised, but that malicious code managed to pass App Store moderation.

Of course, by now all infected apps have been removed, and the embedding itself is pretty easy to spot by the C&C domain names (those got blocked, too). But without prior knowledge the embedding is pretty hard to find: it was elegantly hidden in Apple’s standard libraries, which are used in 99.9% of apps.
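Searching by C&C domain names can be as simple as looking for known strings in a binary. Here is a minimal sketch of that idea. The domains in the list are placeholders made up for this example (the real indicators are in the published reports), and the function name is an assumption too.

```python
# Hypothetical indicator list: placeholder domains, not the real C&C names.
KNOWN_C2_DOMAINS = (b"c2.example.invalid", b"stats.example.invalid")


def find_c2_indicators(binary, indicators=KNOWN_C2_DOMAINS):
    """Return the indicators that appear as plain byte strings in the binary."""
    return [domain.decode("ascii") for domain in indicators if domain in binary]


if __name__ == "__main__":
    clean_app = b"\xcf\xfa\xed\xfe" + b"harmless code and strings"
    infected_app = clean_app + b"http://c2.example.invalid/upload"
    print(find_c2_indicators(clean_app))
    print(find_c2_indicators(infected_app))
```

This only works when the domain is stored in plain form and is already known, which is exactly why the embedding is so hard to find without prior knowledge.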

Allegedly 40 apps on App Store are infected https://t.co/UTSGwvWccj #apple pic.twitter.com/moLosQwB9V

— Kaspersky Lab (@kaspersky) September 23, 2015

There is one curious thing. The Intercept, which specializes in declassifying secret data from Snowden’s revelations, reports that the method XcodeGhost uses to infiltrate Apple devices matches the method described in the government’s secret plans. The reporters state they went public with this information as long ago as last March, boasting about it.

More #XcodeGhost news: Potentially thousands of iOS apps infected, modded versions date back to March: http://t.co/XaHXORV07B

— Threatpost (@threatpost) September 23, 2015

Well, we’d boast back, saying that we were first to notice, even before Snowden happened, back in 2009. For instance, we found malware that infected the Delphi IDE and inserted a malicious embedding into all compiled apps. The idea is just obvious. But, again, once you know about the problem, you can get rid of it easily – just check the integrity of the development tool against the master version.

And, besides, one unbelievably simple recommendation here: never download software from questionable sources. It even sounds quite odd, as we are talking about experienced developers here. Can they really make this mistake? Well, as it turns out, they can.
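Checking a tool against the master version usually boils down to comparing checksums. A minimal sketch, assuming you have obtained a trusted SHA-256 digest of the original installer through a separate channel; the function names and the demo file are made up for this example.

```python
import hashlib
import hmac
import os
import tempfile


def sha256_of(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_master(path, expected_hex):
    """Return True if the file's digest equals the trusted master digest."""
    return hmac.compare_digest(sha256_of(path), expected_hex.lower())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        installer = os.path.join(tmp, "ide_installer.bin")
        with open(installer, "wb") as fh:
            fh.write(b"original installer contents")
        # Stand-in for a digest published by the vendor (assumption).
        trusted = hashlib.sha256(b"original installer contents").hexdigest()
        print("untouched copy ok:", matches_master(installer, trusted))
        with open(installer, "ab") as fh:
            fh.write(b"extra library")  # simulate a tampered copy
        print("tampered copy ok:", matches_master(installer, trusted))
```

The check is only as good as the source of the trusted digest: if it comes from the same questionable website as the download, it proves nothing.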

Bug broker announced a $1 million bounty for bugs in iOS 9

News.

There is an interesting table about the history of jailbreaking various iOS devices. Unlike Android or desktop operating systems, where full control over the system is generally enabled by default and thus exploited quite easily, Apple’s smartphones and tablets (and now also TV set-top boxes and smart watches) have inherently limited user permissions.

It’s been seven solid years that the renowned vendor has been trying to fence off its devices from one end, and the rooting enthusiasts have been trying to bypass this protection from the other end. In the end, most Apple devices have been jailbroken (except Apple TV v3, and, at least for now, Apple Watch) – usually the timing ranges from one day to six months after the product hits the shelves.

.@Zerodium Hosts Million-Dollar iOS 9 Bug Bounty – http://t.co/tQ9Evs3iTi

— Threatpost (@threatpost) September 21, 2015

The newest iOS 9 has been jailbroken, but at the same time it has not. It was not fully jailbroken. The one to ‘take care’ of this problem is Zerodium, which has already announced a $1 million bounty for the method of exploiting iOS 9, provided that:

— The exploit is remote (so it runs automatically when a user visits a specially crafted web page or reads a malicious SMS or MMS);

— The exploit allows side-loading of arbitrary apps (the likes of Cydia);

— The exploit is persistent enough to continue operating after reboot;

— The exploit is ‘reliable, discreet and does not need any action from the user’.

This list proves that Zerodium does not have any plans to waste $3M (this is the total amount of the bounty) just to please rooting enthusiasts. Chaouki Bekrar, the founder of Zerodium, was initially the founder of VUPEN. The latter specialized in selling vulnerabilities and exploits to state actors. It’s a murky grey zone of the information security world, both from the legal and moral standpoints: good guys prosecute bad guys with the bad guys’ own tools.

Whereas VUPEN (at least, officially) was not messing around with bug brokerage, Zerodium was initially created for this purpose. That means someone would ultimately hit the jackpot, and someone would be breaching devices with the purchased exploit and not disclosing details of the vulnerability (otherwise what’s the point in paying the bounty?).

But we already know what happens when bug brokers get hacked themselves. Remember Hacking Team: a number of 0-days ended up in the public domain, a lot of nasty business between the company and some Middle East states got uncovered, and, anyway, that was just one big fiasco.

Hackers Release Hacking Team Internal Documents After Breach – http://t.co/NIsqv96Ctc

— Threatpost (@threatpost) July 6, 2015

The moral here is that should someone have an urge to jailbreak their own smartphone, they are totally entitled to do that (or, more accurately, not that totally). But jailbreaking someone else’s device without the owner’s consent is not good, and once such an opportunity is discovered, it should by all means be disclosed to the vendor and patched. Anyway, exploiting for the greater good is still exploiting.

What else happened:

Adobe patched 23 vulnerabilities in the Flash Player. 30 more bugs were patched in August.

Huge Flash Update Patches More Than 30 Vulnerabilities – http://t.co/45seXMk5qT

— Threatpost (@threatpost) August 11, 2015

OPM reported even more severe consequences of the massive breach that happened this year: over 5.6 million fingerprints belonging to federal agency employees were compromised. Armchair analysts note that your finger is not as replaceable as a compromised password, and the number of combinations is really limited. For now, hardly anything can be done with the stolen fingerprints, but no one knows what the tech of the future will be capable of.

Reminder to change your fingerprints often. Use a fingerprint manager and don't reuse the same fingerprint. https://t.co/IMkEyslAph

— Jonathan Matthews (@jpluscplusm) September 23, 2015

Oldies:

“PrintScreen”

A very dangerous virus which occupies 512 bytes (one sector). It infects the boot sector of floppy drives and hard drives when they are read (int 13h). The old boot sector is written to the 1/0/3 address (side/track/sector) on a floppy drive, and to the address 3/1/13 on the hard drive. It can corrupt one of the FAT sectors or stored data (depending on the capacity of the drive). When infecting a hard drive, it assumes that the boot sector is stored at the 0/1/1 address (which proves the low level of proficiency of the coder who created the virus).

It also hijacks int 13h. Judging by the listing of the virus, when infecting a drive it should call int 5 (Print Screen) with a probability of 1/256 (depending on the value of its internal counter), but due to a mistake the new value of the counter is not saved.

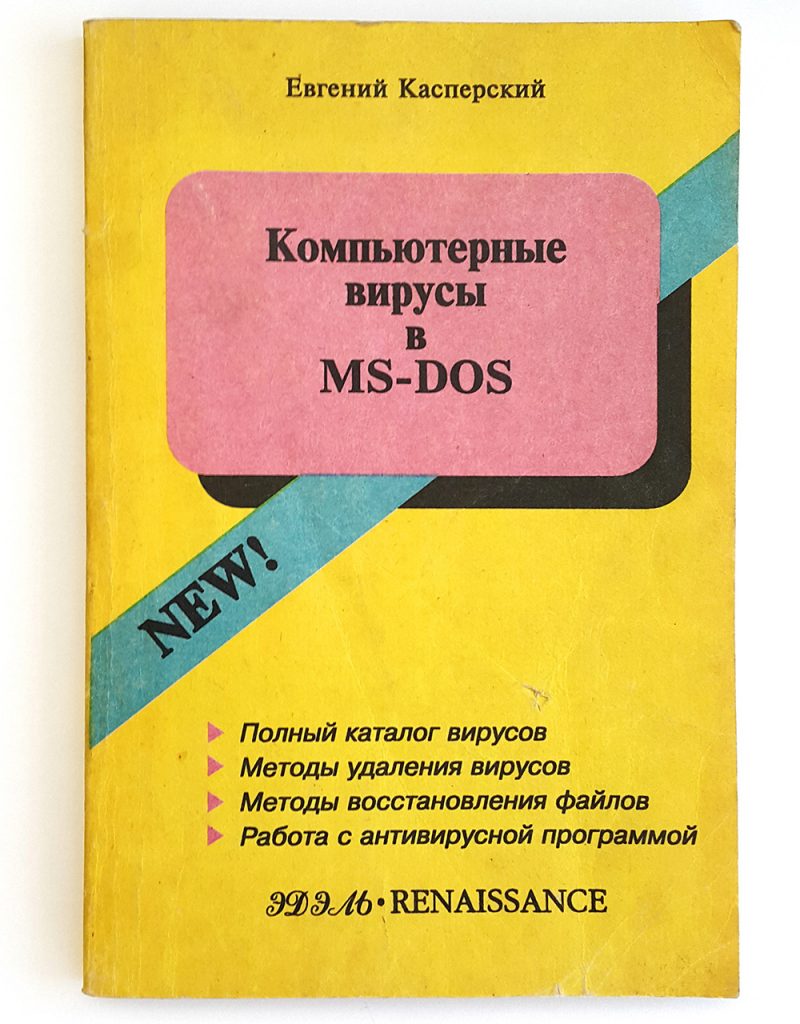

Quoted from “Computer viruses in MS-DOS” by Eugene Kaspersky, 1992. Page 102.

Disclaimer: this column reflects only the personal opinion of the author. It may coincide with Kaspersky Lab position, or it may not. Depends on luck.
